Getting Stock Prices using API Calls

Using data in your financial endeavors is a integral part of the analysis process. You can almost think of data as the lifeblood of finance. Without data, financial models are useless. But there is so much financial data available? How do you get access to it? This article will show you one way of getting stock prices using API calls.

Introduction

There are no two ways about it. Data and finance go hand-in-hand. They always have. Financial markets usually are early adopters of technology, because, let's face it - companies in this industry have money, and lot's of it!

If you are active in finance, even for your own purposes, you'll eventually need to find your way around obtaining and using data. Doing so often requires coding.

Many financial mavens use Visual Basic for Applications (VBA) inside Microsoft Excel. This is a programming language that is integrated directly into the product. These individuals are usually quite proficient with the language.

These financial mavens have also gotten quite good at wrangling VBA to make it do what they want. But the language is not really meant for industrial use, especially with the growing supply of data.

You can certainly use VBA for your coding and it probably will serve your purposes. However, I am seeing an industry trend that is moving away from VBA in favor of a programming language called Python.

Why Use Python?

I am certain that die hard users of Excel and VBA will argue that VBA is here to stay. To a point, I agree. Excel and VBA aren't going away anytime soon. However, Excel is not equipped to handle the exponentially growing data capture that is occuring in the world.

The current version of Excel supports one million rows. Many data sets can eat that one million rows for breakfast, if you know what I mean. Many data sets contain considerably more rows and are considerably more diversified in data types, file types, etc.

Python too, has limitations. It can handle up to the limits of the memory of your computer. But if you know Python, you can get around this limitation by running your code online. Cloud providers and web-based compilers/interpreters can run code on high-end machines while making it available to the average person.

Did you know that you can use IBM's supercomputer Watson? It's the same computer that beat the best players on Jeopardy! You simply sign up and choose a subscription and you can get started immediately.

I will go out on a limb and predict that within a few years, Python will be intergrated into Excel, just as is VBA. But we'll see if that happens.

I guess you can tell that I am choosing to use Python to access data from a financial data provider. I also believe you should consider using Python yourself as there are many libraries that it supports that can do all kinds of fun stuff with finance. However, this won't be a tutorial on how to program in Python, nor on financial libraries that you can obtain online. There are plenty of resources online that can help you with this.

What Is an API?

Data vendors often create programmatic libraries of their data that the make available to subscribers. This programmatic access is usually referred to as an Application Programming Interface, or API. Many APIs are web based, which makes it accessible to anyone with a web connection.

Of course, you probably will need an access key given by the company to ensure you don't abuse the privilege when using the data. Depending on the data provider, this is usually free of charge.

Data Provider Tiingo Selected for this Tutorial

Tiingo is a financial data provider that offers a few subscription levels (free and paid) of data access. One of those tiers is free of charge. I chose Tiingo because it is easy to use and it provides data for free. The company does offer more options for people willing to pay, but for the purposes of this tutorial, the free version will work fine.

You will need to sign up and obtain an access key, which the company calls a token. You may want to store that token in a secure location. If it gets into the wrong hands, your data access will be limited or even exhausted. Luckily, if you discover that someone is using your token, you can simply generate a new one, or ask the company for a new token.

Tiingo uses a concept known as REST API which for all intents and purposes means web-based access. In other words, you send URL (web address) to their API engine and if you use the proper parameters, the engine will send you back the requested data. There is a bit more to it than that, but that is the basic idea.

How and Where to Code This

Once you have access to Tiingo, log into your account. You'll need to obtain your token (also called an API Key). The company also has documentation on its website that shows you how to make valid calls to access the data. Note that the calls are all HTML based (which is the language of a web page). This means that any computer language capable of accessing web pages can be used with Tiingo.

Many companies are preferring this method (REST API) for API calls for its language-agnostic capabilities. Before REST API, data providers would need to create wrappers for each computer language it chose to support. You can see how this could be a nightmare whenever major changes were implemented. With REST API, the company need only change the internal workings of the API when changes are necessary. The API calls using whatever language suits the caller will usually remain the same.

If you have never programmed before, you are probably going to be lost with this tutorial. If you are an experienced coder, but don't know Python, you should be able to pick it up pretty quickly. Either way, I will include resources to help with learning Python and also individual concepts where applicable.

Here is what you'll need to know for this tutorial:

- Basics of Python

- How to Import Packages (and if necessary, install them)

- How to Create Function Calls with Parameters

- Knowledge of for loops

What You Will Learn in This Tutorial:

- How to Assemble an API Call from Tiingo

- Using the requests() call from import requests

- Converting the JSON response (from the requests) call into something is useable

- Next Steps After Obtaining the Data

What You Will Need to Run This Code

You will need a Python interpreter. This used to require downloading from a Python vendor and installing it on a local machine. For this tutorial, you can use Google's Colab option:

https://colab.research.google.com/

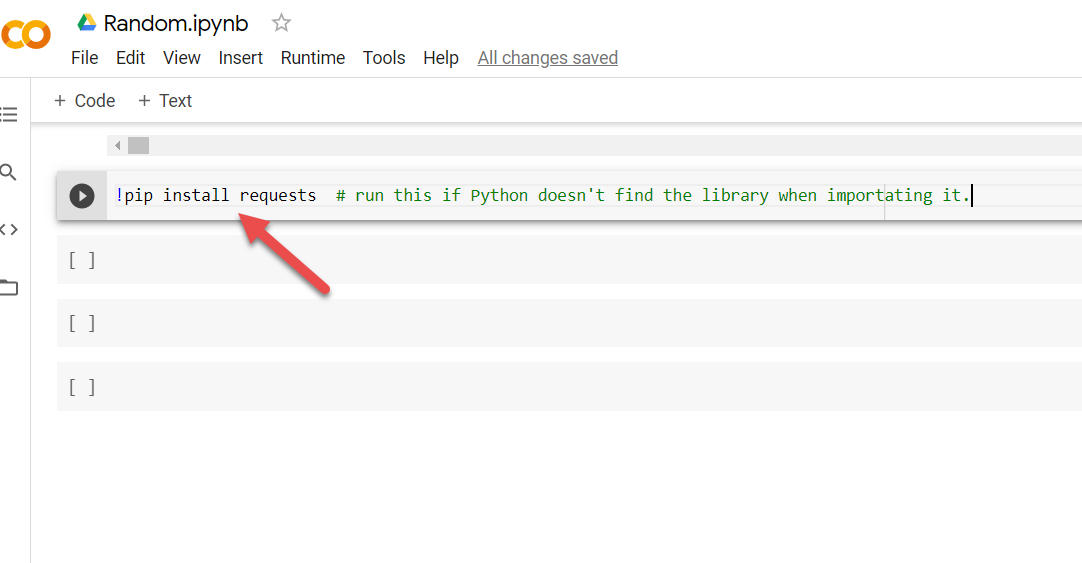

This way, you won't need to install any compilers or interpreters. The Google option should already have the necessary libraries loaded. If not, you'll need to run:

!pip install requests

You can call this directly in the Jupyter Notebook cell as follows:

Note: to use Google's Python interpreter, you will need to sign in to your Google account. If you have YouTube account or a Gmail account, you already have a Google account and you can use that to sign in.

Sequence of Code

Before showing the code snippets, I'll describe a high level overview of how we'll structure the code.

- Import Requests (install if not already)

- Create a function called get_stock_data(symbol, start, end) - this function will assemble the proper URL to call Tiingo

- Create a function called decode_response, which will be responsible from turning the json returned from get_stock_data into a format that is easier to use (arrays).

- Print out the results of the calls

Anatomy of a REST API URL

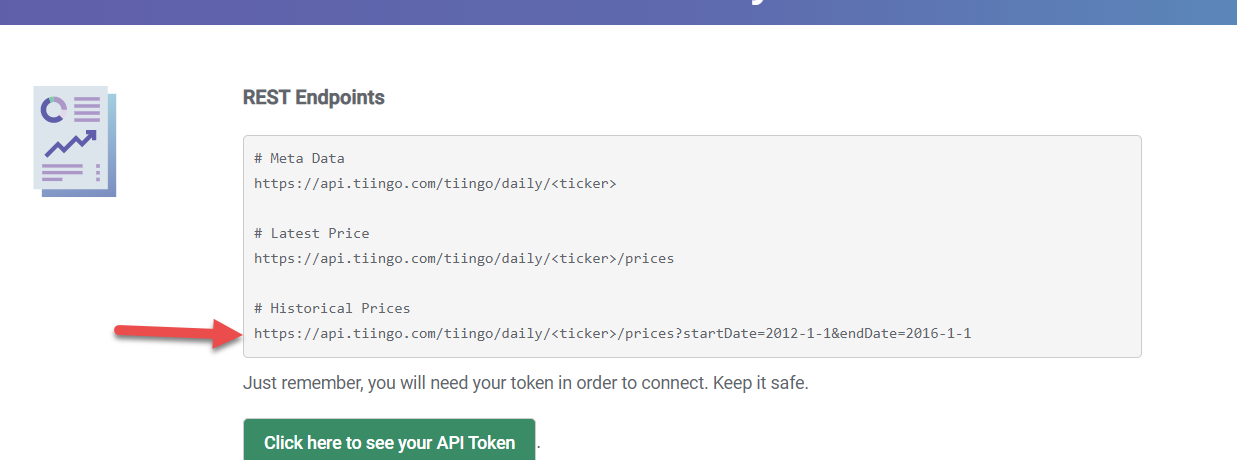

Although it's impossible to generalize REST API urls, we can use the example from Tiingo's website to show how its API call would be made for historical stock prices. The URL must be assembled into the form shown here:

NOTE: If it's difficult to see the image, you can see the same page from the documentation:

https://api.tiingo.com/documentation/end-of-day

The base part of the URL will contain the following:

https://api.tiingo.com/tiingo

Then, the next shows the frequency of data being asked for, in this case daily prices:

/daily/

Next, we'll replace the <ticker> with the actual ticker of prices we want to obtain:

<ticker> # Replace this with the stock symbol (MSFT) or whatever

Then, we use the prices? to indicate that we want to retrieve price data:

/prices?

Finally, we pass in the parameters:

startDate=2012-1-1&endDate=2016-1-1

One parameter that is missing is the token. This is something that must be added for the code to work:

https://api.tiingo.com/tiingo/daily/<ticker>/prices?startDate=2012-1-1&endDate=2016-1-1&token={your token here}NOTE: the token should be placed in quotes and the brackets above should NOT be included.

Is it necessary to break up the REST API url? No, but it just makes it easier to fill in the details that we need to supply to the API call. Remember, running the URL with the word <ticker> in it will fail. We need to replace <ticker> with the actual stock symbol. By breaking up the string, we can inject whichever values we need to replace and then reassemble the string to make the function call.

This is an important point to remember. For the API calls to work, the url that we pass must be in the correct format with all the proper replacement strings inserted.

Debugging Tip!

After you have reassembled the string in its entirety (hopefully with all the correct replacements), print it out, copy it into your browser and run it. If the string was assembled correctly, the stock price history data (or whatever metrics you are shooting for) will be shown on the screen. If not, you'll get an error!

Code

When typing in the code, don't forget to use the proper replacements, like the token. You'll need to obtain your token from Tiingo and then make sure it is part of the string.

Import requests

# Create the function retrieve_historical_prices

def retrieve_historical_prices(symbol, start_date, end_date):

base_url = "https://api.tiingo.com/tiingo"

frequency = "/daily/"

prices = "/prices?"

token { place your token here (from Tiingo and in quotes but no brackets) }

params = {

'token': token,

'startDate': start,

'endDate': end

}

tUrl = base_url + frequency + symbol + prices

response = requests.get(tUrl, params)

return response

# Create a function called retrieve_json_columns

def retrieve_json_columns(response):

json_response = response.json()

dates = []

closes = []

for json_object in json_response:

dates.append(json_object['date'])

highs.append(json_object['close'])

return dates, closes

# Now, call the first function, get_stock_data. Feel free to use different parameters

response = retrieve_historical_prices('MSFT', '2010-1-1', '2020-12-31')

# Convert the data into something more intuitive to use

dates, closes = retrieve_json_columns(response)

# Print the values returned

print (dates)

print (closes)

Next Steps

What you do with the data after you obtain it and convert it is up to you. But some suggestions would be to:

- graph the data,

- technical analysis signals

- rebalancing of portfolios

- export to flat files or spreadsheets

- use spreadsheet Python libraries for futher processing

Convert the code to pandas DataFrames. Experienced Python programmers already know about the library pandas. They wouldn't be experienced Python programmers without this library. Trust me on this one. These experienced programmers would also likely advise to turn the three arrays into a pandas DataFrame. I agree with doing this.

However, I wanted to keep the tutorial as simple as possible. Using pandas in this example would add further complications and it wouldn't necessarily add much to the lessons given.

Besides, when beginners become more experienced, they will naturally migrate towards learning pandas. It's such a important component that I am surprised the creators of Python haven't already integrated the library.

The point of all this is when you do get around to learning Python and get some experience, please change the code to place it in a DataFrame. This will make your life easier. Enough said about this topic!

DataFrames open up a whole set of options. In fact, many useful Python libraries may function only with DataFrames, and for good reason: they make everyone's life easier. With DataFrames, you can:

- work with charts easier

- load file formats using just one call

- save file formats using just one call

- Time series analysis

- Elementwise processing

- Subsetting

- Plus more...

All these functions are commonly used in financial markets. You can certainly work with financial data without DataFrames, but seriously! Why would you?

Other Considerations

First, let's get the ugly stuff out of the way. Data vendors (and not just financial ones) make every effort to ensure their data is accurate and timely. However, with free data the motivation is not the same as it is for paid subscriptions. These vendors often don't guarantee that free data is accurate, or even timely, for that matter.

Also, I am not offering any legal advice, but I have come to learn that consumers of data should be careful with how they use any data they obtain from external sources. Usually, they are not free to do whatever they please with it. All consumers of data should read the terms of service of any data provider and they should seek legal counsel if unsure.

Now for the good stuff! If you notice from the code above, processing API data was not overly complicated. When you work with other vendors that support REST API, the basic format of the code will remain the same. You read a URL in the agreed upon format (published by the vendor). Then, you process that request and convert it to the format that makes sense for how you'll use it.

Also, most data vendors using REST API are choosing to return data in JSON format. This tutorial has already shown you the basics of working with that format. So, you're good to go with this.

Web Scraping

An alternative to APIs is web scraping. You should never choose web scraping as the first choice if APIs are available. You may have no choice if APIs are not available for the data you are looking for. But if it is available, make it a rule to use the API over the scraping, unless there are reasons (like costs) that prevent you from using the API (even if it exists).

Web scraping can be a nightmare. You need to know where in the HTML code the tags that the scraping algorithms will use to extract the appropriate data.

Web scraping has legal and ethical ramifications of their own, including using someone else's bandwidth without their permission. You also use bandwidth when using REST APIs, but the vendor can set up limits to prevent you from using too much at any given time. You can usually increase those limits by paying for a subscription.

Web scraping causes headaches for the owners of websites, especially when people don't use it responsibly. They have to track down the culprits and then try to learn if the data is being used without permission, etc.

If you write web scraping algorithms to obtain data, expect vendors to change the web pages and render your scraping methods useless. This happens all the time, and yes, vendors do this on purpose. Sometimes, the changes are minor. But often, it is a complete reworking of the tags within the pages that you are targeting.

Conclusion

You have the basis for learning how to use REST APIs to obtain financial data. You may need to learn the basics of Python or at least, brush up on your skills. Python is one of the easier languages to learn, so don't get intimidated by the thought of having to learn a new computer language.